Overview: This report provides a high-level overview of how Photon Insights generates unified summaries across many documents.

Core Summarization Capability:

Photon’s greatest strength is providing unified insights from a wide variety of sources (news journals, social media, SEC filings, earnings calls, etc.). Take a look at this sample “Photon Insight” regarding Microsoft/ChatGPT: Photon produces an AI-generated summary, in a human-like tone, by drawing on selected elements from a set of articles. At a very high level, the proprietary Photon architecture combines large language models with classical supervised and unsupervised learning methods to generate the appropriate punchlines.

Performance Metrics (warning: technical discussion to follow):

We are often asked how much information our summaries capture and how good they are, both across many documents and on a per-article basis. We provide some rough metrics below, but note that evaluating summarization is extremely subjective (as evidenced by this paper: https://arxiv.org/pdf/2007.12626.pdf). Even if our AI misses what you might deem valuable information, we always link back to the original sources, so you can obtain more context if needed.

On a per-document basis:

Below are our ROUGE (Recall-Oriented Understudy for Gisting Evaluation) scores relative to a few state-of-the-art (SOTA) models; higher is better.

| Metric/Model | Photon Proprietary Model | Yale-LILY/brio-cnndm-uncased | facebook/bart-large-cnn | google/pegasus-cnn_dailymail |
| --- | --- | --- | --- | --- |
| ROUGE-1 | 61.14 | 41.44 | 38.85 | 35.54 |
| ROUGE-2 | 45.02 | 24.69 | 22.92 | 20.39 |
| ROUGE-L | 52.04 | 30.20 | 30.59 | 28.30 |

At first glance this looks great for us, as we seem to be blowing the others out of the water. It is not as impressive as it seems, however: our models were trained on our own datasets (which we feel result in succinct, human-like summaries), so our ROUGE scores should be expected to be higher. We included these numbers because several scientists asked for them, but we feel a better method of evaluation is the qualitative comparison provided in the next section.
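For readers unfamiliar with ROUGE, here is a minimal sketch of how ROUGE-N and ROUGE-L F1 scores can be computed from a candidate summary and a reference summary. It is an illustration only, not the evaluation code behind the table: the whitespace tokenizer and the function names are assumptions, and standard ROUGE toolkits add stemming and other preprocessing. The functions return values between 0 and 1, whereas published scores are usually shown multiplied by 100.

```python
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a simplifying assumption)."""
    return text.lower().split()


def ngrams(tokens, n):
    """Return a multiset (Counter) of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def f1(matches, cand_total, ref_total):
    """Combine a match count into the harmonic mean of precision and recall."""
    if matches == 0 or cand_total == 0 or ref_total == 0:
        return 0.0
    precision = matches / cand_total
    recall = matches / ref_total
    return 2 * precision * recall / (precision + recall)


def rouge_n(candidate, reference, n):
    """ROUGE-N F1: clipped n-gram overlap between candidate and reference."""
    cand = ngrams(tokenize(candidate), n)
    ref = ngrams(tokenize(reference), n)
    matches = sum((cand & ref).values())  # & keeps the minimum count per n-gram
    return f1(matches, sum(cand.values()), sum(ref.values()))


def lcs_length(a, b):
    """Length of the longest common subsequence, by dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference):
    """ROUGE-L F1: based on the longest common subsequence of tokens."""
    cand, ref = tokenize(candidate), tokenize(reference)
    return f1(lcs_length(cand, ref), len(cand), len(ref))


if __name__ == "__main__":
    reference = "tesla reported strong quarterly profit"
    candidate = "tesla reported strong profit"
    print(round(rouge_n(candidate, reference, 1), 4))
    print(round(rouge_n(candidate, reference, 2), 4))
    print(round(rouge_l(candidate, reference), 4))
```

Because ROUGE rewards word overlap with the reference, a model trained on data whose references resemble the test references will tend to score higher, which is the caveat raised above.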

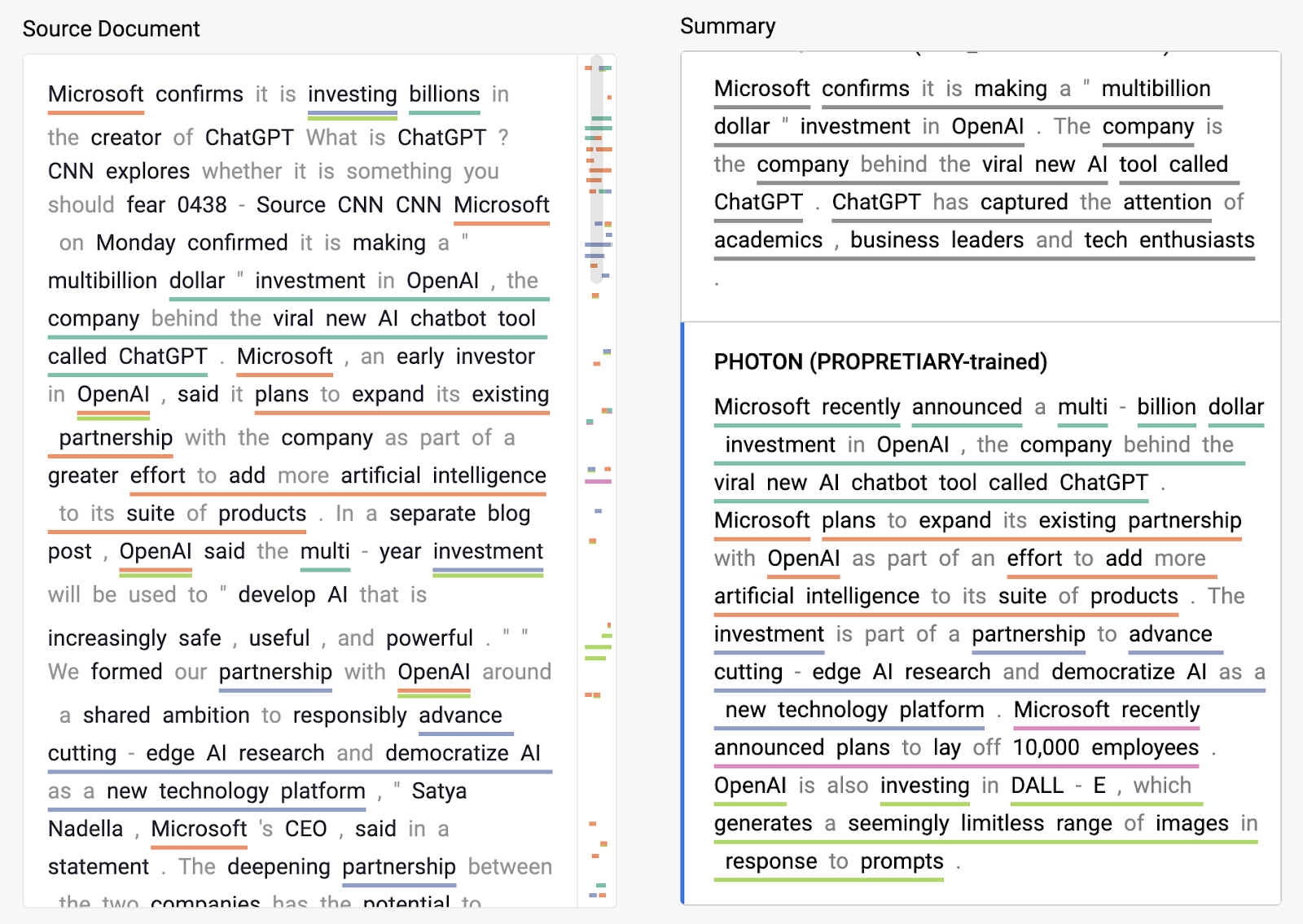

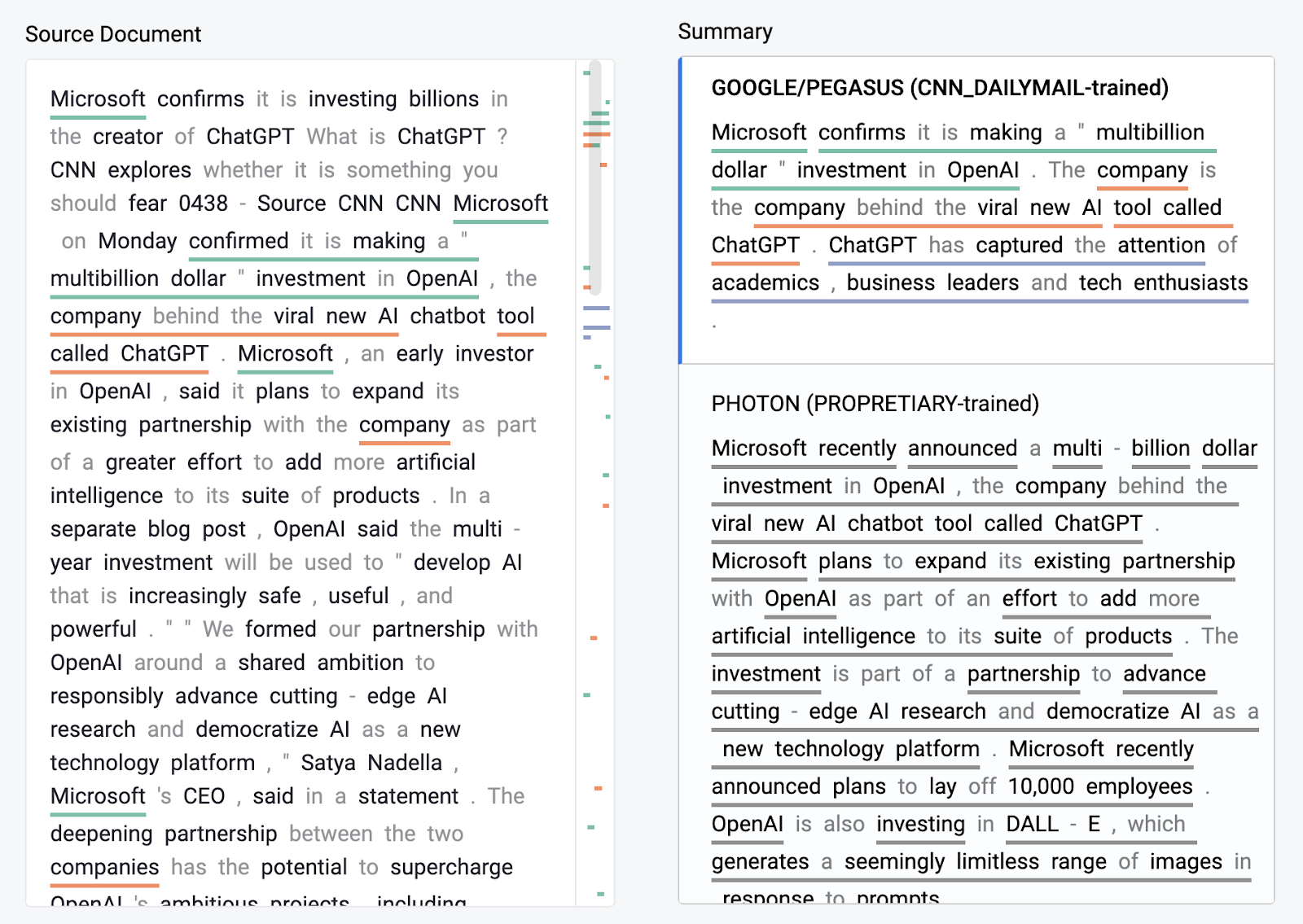

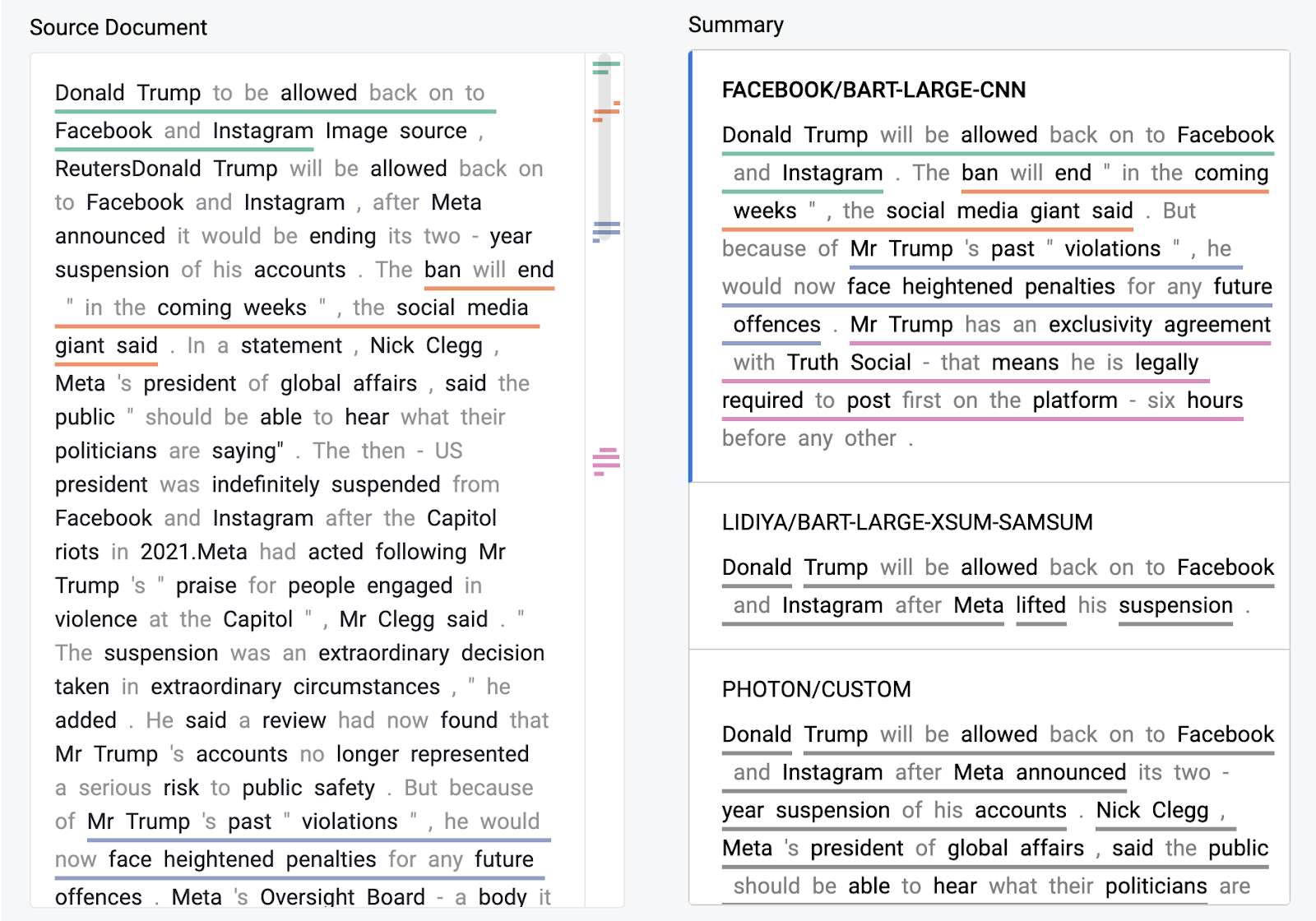

Qualitative comparison of models: Please also see this post [INSERT LINK to CIR SUMMARIZATION POST] for an application of our architectures to constructing the CIR executive summary, and how it compares to other models. Most state-of-the-art language models, when asked to summarize an article concisely, seem to be biased toward the information in the initial part of the text and perform poorly at extracting information from the later parts of the input. Ideally, a summary of any lengthy document or article should let the reader grasp the whole picture, and we feel our proprietary models excel here.
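One rough way to probe the lead bias described above is to split the source text into equal slices and check how much of each slice's vocabulary shows up in the summary. The sketch below is a hypothetical helper written for illustration; `segment_coverage` and its word-overlap heuristic are assumptions, not part of Photon's architecture or of any tool mentioned in this post.

```python
def segment_coverage(source, summary, segments=3):
    """For each of `segments` equal slices of the source, return the fraction
    of the slice's distinct words that also appear in the summary."""
    src = source.lower().split()
    summary_words = set(summary.lower().split())
    n = len(src)
    result = []
    for i in range(segments):
        # Integer boundaries so the slices cover the source exactly once.
        lo, hi = i * n // segments, (i + 1) * n // segments
        words = set(src[lo:hi])
        # An empty slice (very short source) counts as zero coverage.
        result.append(len(words & summary_words) / len(words) if words else 0.0)
    return result


if __name__ == "__main__":
    source = "alpha beta gamma delta epsilon zeta eta theta iota"
    lead_biased_summary = "alpha beta gamma delta"
    # Coverage that drops from the first slice to the last suggests lead bias.
    print(segment_coverage(source, lead_biased_summary))
```

A summary that draws on the whole document should show roughly even coverage across slices, while a lead-biased one will show high coverage for the first slice and little for the rest. Word overlap is a crude proxy, so this is best treated as a quick sanity check rather than a metric.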

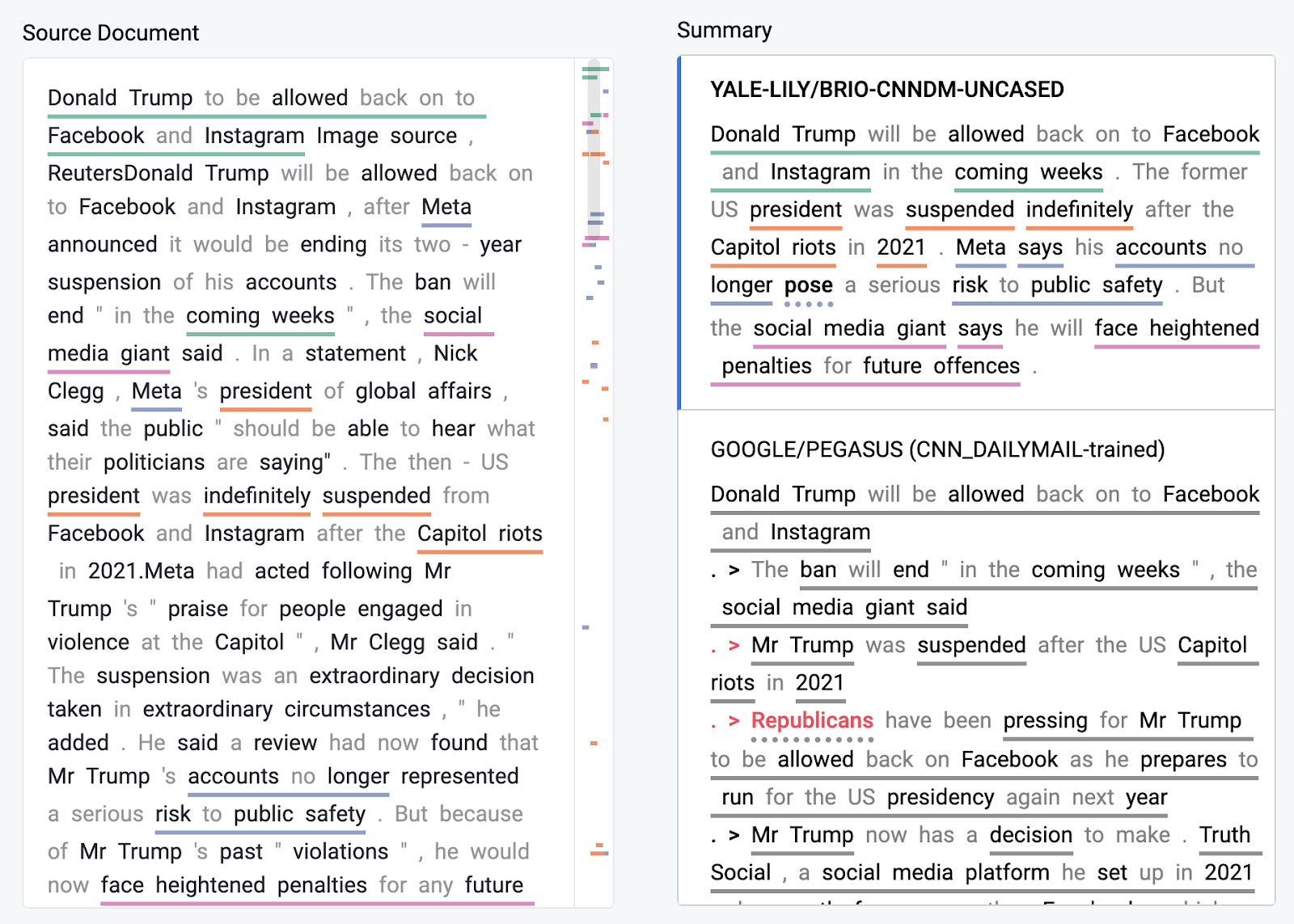

Thanks to the tool presented in [SummVis: Interactive Visual Analysis of Models, Data, and Evaluation for Text Summarization (Vig et al., ACL-IJCNLP 2021)], we can visualize how much of the source document each model's summary captures. We are happy to provide live visualizations, and it is clear that the Photon algorithm captures a large amount of context. Take, for instance, the sample visualizations below, which correspond to this document [INSERT LINK to SOURCE DOCUMENT]. In layman’s terms, the more distinct colors in a visual, the more extensively the summary captures the document.

Photon Algorithm:

Of all the listed models, ours is the only one to surface the information about DALL-E (an image generation service developed by OpenAI and released well before ChatGPT), which we felt was highly relevant to the context of the article. We hope the reader appreciates the inclusion!

Google Pegasus:

Facebook BART Large (CNN):

BRIO:

A cool GIF showing several models:

Subjective assessment of our unified framework:

People often ask what percentage of the important insights we capture. Unfortunately, this is tough to answer: as the SummEval paper linked above shows, it is a highly subjective task. Here we provide three examples of “Photons” from approximately 4 PM ET on 1/26/2023, along with information we might have missed. The good news is that even when a Photon Insight misses something, we still reference the original articles and tell you exactly where each summary was derived from, so more context is available if you need it.

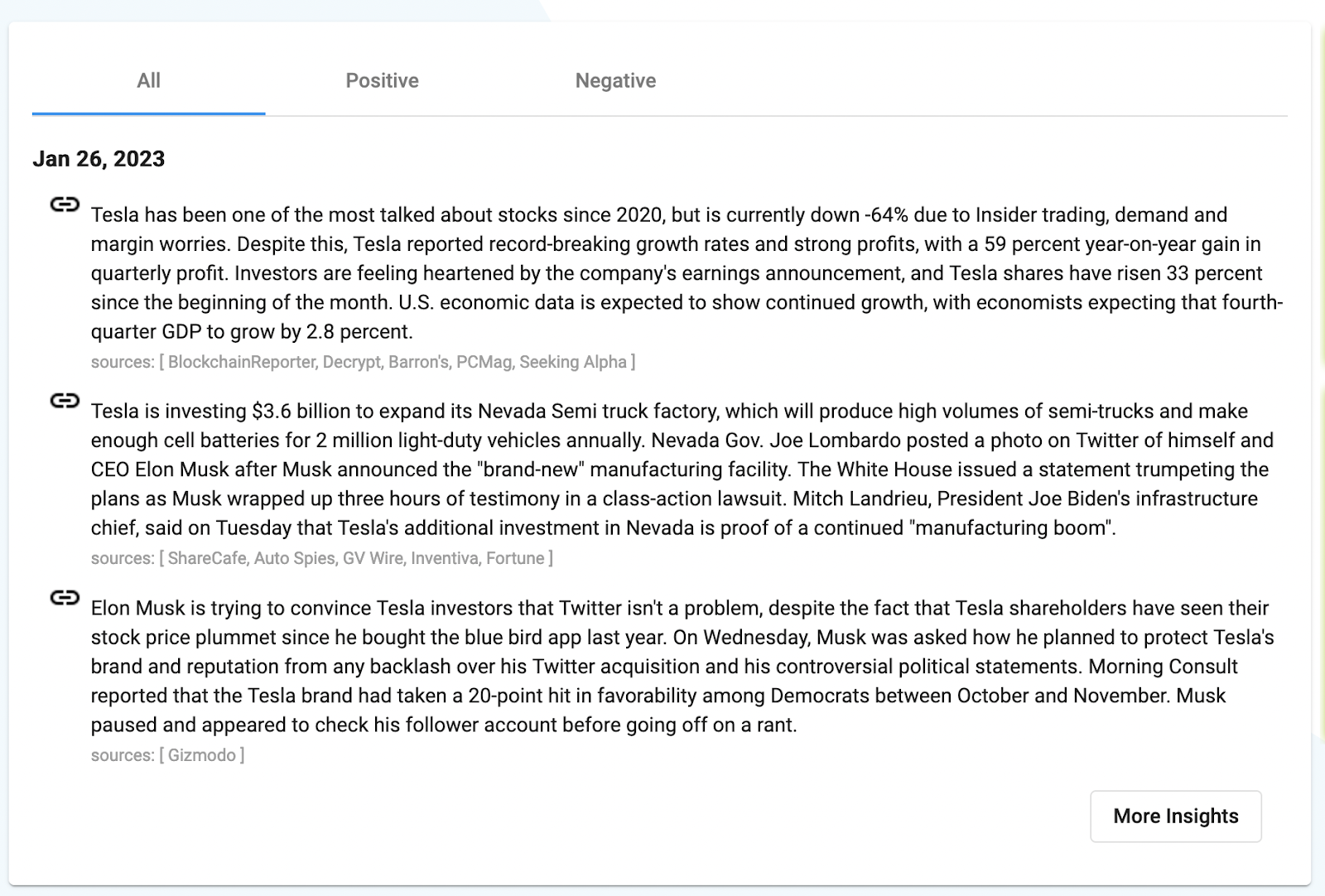

Example 1, Tesla:

Photon Insights:

Human-generated core points from all articles:

1. Tesla reported strong profits, with a 59 percent year-on-year gain in quarterly profit and rising demand.

2. Tesla reported 405,278 vehicle deliveries globally and production of 439,701 vehicles, but its automotive gross margin fell to 25.9%.

3. Tesla continues to lose money on Bitcoin, but Elon Musk is still holding on.

4. Tesla recently dropped prices on its entire lineup, including the Model Y, which was eligible for a $7,500 US federal EV tax credit.

5. Tesla has been one of the most talked about stocks since 2020, but is currently down 64% since the start of 2022 due to insider trading, demand, and margin worries.

6. An analyst wrote that the report was “better than feared.”

7. Elon Musk is trying to convince Tesla investors that Twitter isn’t a problem, despite the fact that Tesla shareholders have seen their stock price plummet since he bought the blue bird app last year.

8. Tesla is investing $3.6 billion to expand its Nevada Semi truck factory, which will produce high volumes of semi-trucks and make enough cell batteries for 2 million light-duty vehicles annually.

Score: 7/8. Everything except the point on Bitcoin was captured.

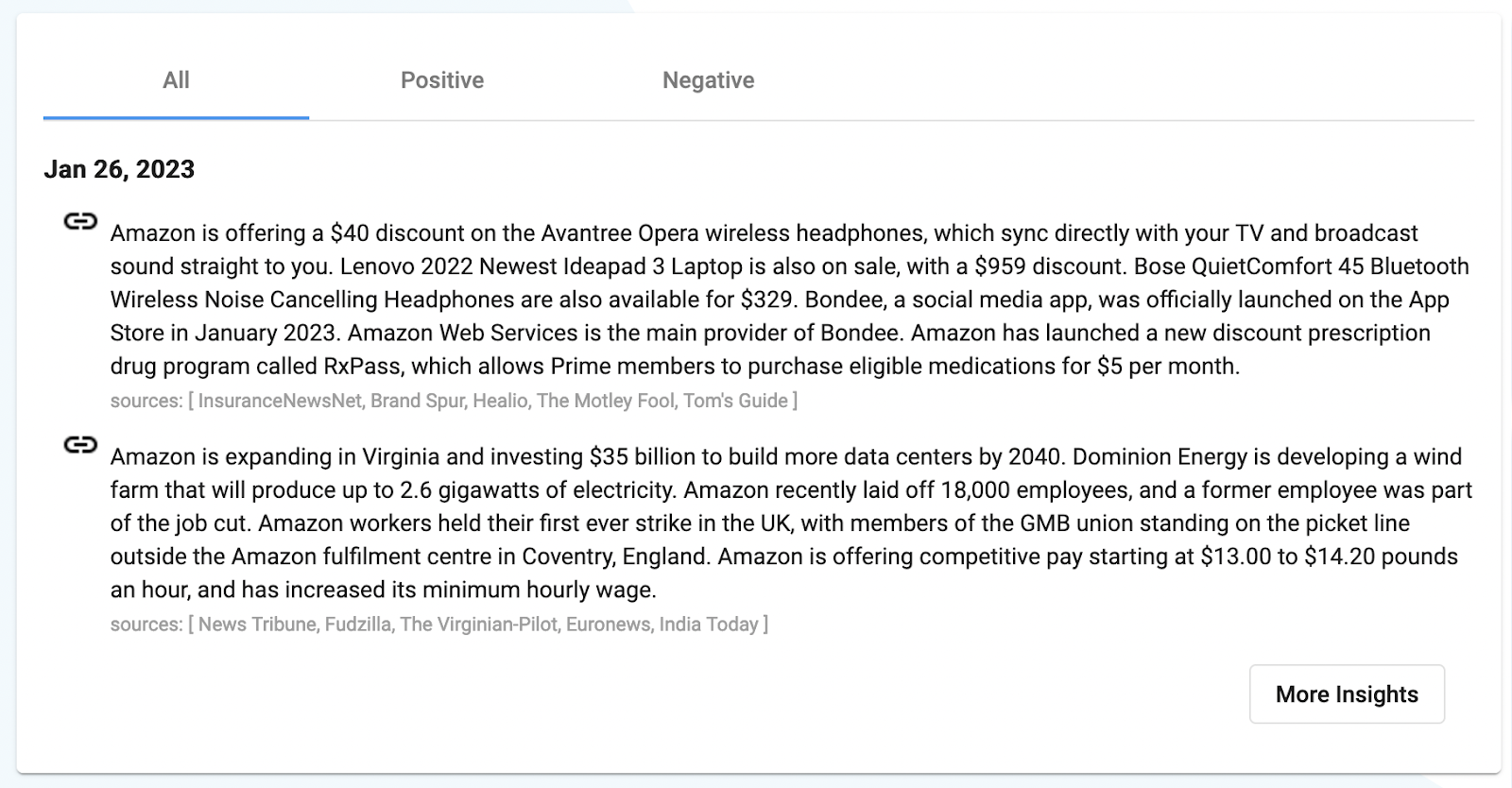

Example 2, Amazon:

Photon Insights:

Human-generated core points from all articles:

1. Amazon recently laid off 18,000 employees, which was more than expected. A former employee was part of the company’s job cut, and the process felt “extremely robotic” to her.

2. Amazon is having difficulty in its battle against its UK employees, as it is finding it tougher to break UK unions than its usual union stomping ground, the US.

3. Workers at Amazon’s Coventry depot recently staged a historic strike to demand pay of £15 an hour and to be recognised by a union.

4. Amazon Prime Gaming is offering a variety of free games for February 2023.

5. Amazon is expanding in Virginia, and a Hampton Roads agency is looking to land a data center. The company is investing $35 billion to build more data centers by 2040.

6. An Amazon Warehouse worker has made shocking claims about the unjust treatment of employees, claiming that the company treats its robots better than its human staff.

7. Amazon has announced the launch of the RxPass, a subscription-based benefit that allows Prime members to order eligible medications for a flat, low fee of $5 per month.

Score: 5/7. We missed Amazon Prime Gaming (point 4), although whether it is important is subjective, and we could have included the harsh conditions for employees (point 6).

Example 3, Google:

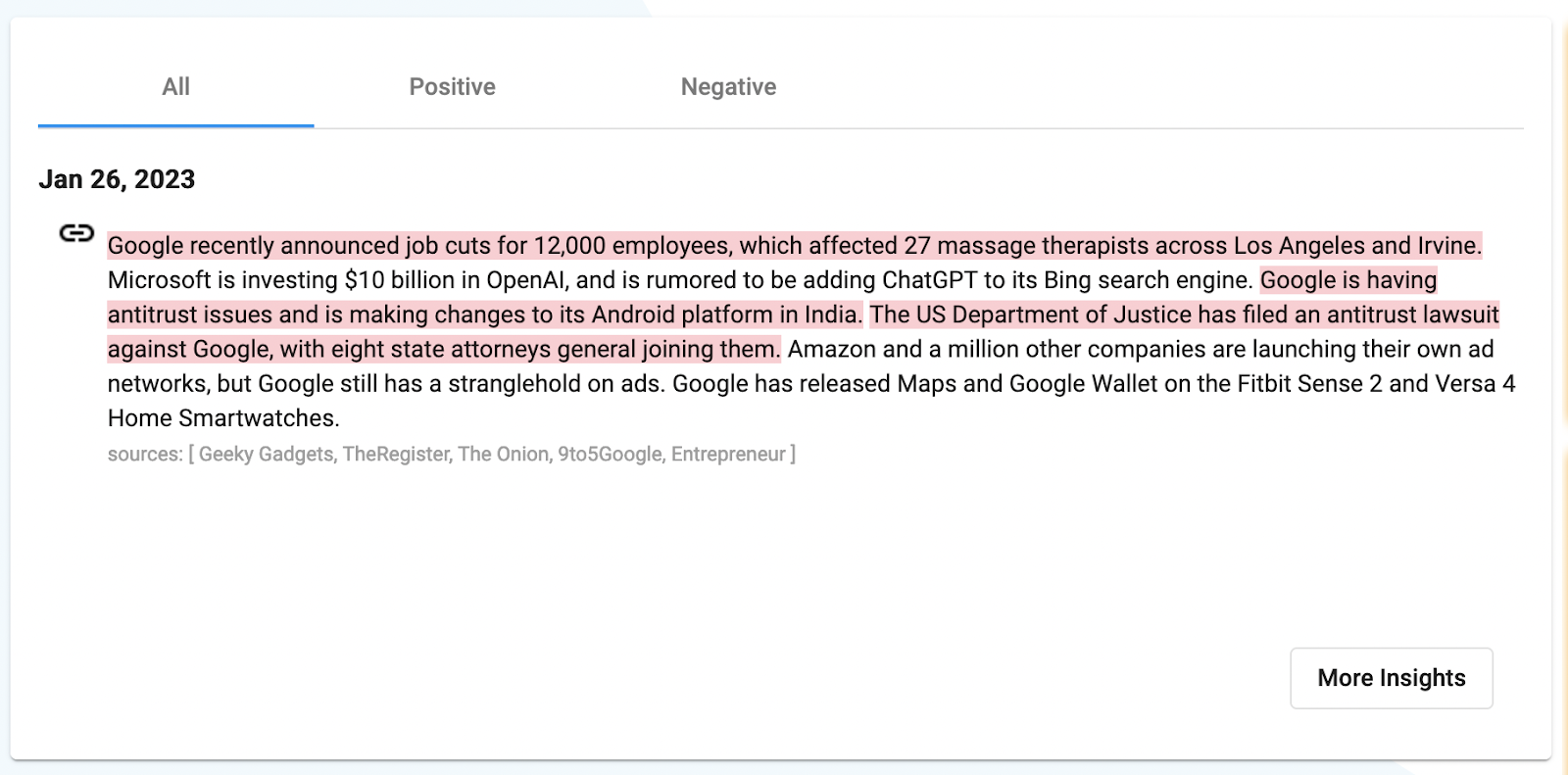

Photon Insights:

Human-generated core points from all articles:

1. Google recently acquired Liist, an app that allowed users to save places they see in Instagram and TikTok.

2. The Justice Department has accused Google of an advertising monopoly, and the company has been sued by the Department of Justice and eight states.

3. Google was ordered to pay $161 million and lost its appeal against the ruling. India’s Supreme Court found Google’s appeal against monopoly fines unappealing.

4. This week, Google announced plans to cut 12,000 employees due to a slowdown in its revenue growth.

5. AI is becoming increasingly popular, and Google may be only a year or two away from total disruption.

Score: 4/5. We captured everything except the acquisition news (point 1). For a company of this scale it might be fine to omit this, and it was reported in only one of the news articles.

Hopefully these three examples illustrate how difficult it is to assess summarization frameworks given the subjectivity of the task, while also providing confidence that Photon captures the essence of the coverage and links back to the original sources in case we missed anything.
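For readers who want the three scores side by side, the tally below is a small sketch of the arithmetic. The per-company counts are the ones reported in the examples; the pooled figure is simply derived from them and is not a claim about general performance, since three hand-scored examples are far too few to generalize from. The helper names are illustrative.

```python
# (captured points, total human-identified points) from the three examples.
scores = {
    "Tesla": (7, 8),
    "Amazon": (5, 7),
    "Google": (4, 5),
}


def coverage(captured, total):
    """Fraction of human-identified core points the summary captured."""
    return captured / total


def pooled_coverage(score_table):
    """Coverage over all points pooled together, so larger examples weigh more."""
    captured = sum(c for c, _ in score_table.values())
    total = sum(t for _, t in score_table.values())
    return captured / total


if __name__ == "__main__":
    for company, (captured, total) in scores.items():
        print(f"{company}: {captured}/{total} = {coverage(captured, total):.1%}")
    print(f"Pooled: {pooled_coverage(scores):.1%}")
```

Pooling counts 16 of 20 points as captured. Which points count as "core" was itself a human judgment, so a different annotator could reasonably arrive at different totals.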

Thanks for reading, and please don’t hesitate to contact us if you have any questions!